mirror of

https://github.com/Websoft9/websoft9.git

synced 2024-11-21 15:10:22 +00:00

test docker build


parent

f5a6573dde

commit

aa2f781fae

98 changed files with 6 additions and 5894 deletions

3

.github/workflows/media.yml

vendored


|

|

@ -1,3 +1,6 @@

|

||||||

|

# This action is triggered by the docker.yml action

|

||||||

|

# The docker.yml action downloads the artifact for the build

|

||||||

|

|

||||||

name: Build media for apphub image

|

name: Build media for apphub image

|

||||||

|

|

||||||

on:

|

on:

|

||||||

|

|

|

||||||

3

.gitignore

vendored


|

|

@ -6,4 +6,5 @@ logs

|

||||||

.pytest_cache

|

.pytest_cache

|

||||||

apphub/swagger-ui

|

apphub/swagger-ui

|

||||||

apphub/apphub.egg-info

|

apphub/apphub.egg-info

|

||||||

cli/__pycache__

|

cli/__pycache__

|

||||||

|

source

|

||||||

Binary file not shown.

Binary file not shown.

Binary file not shown.

|

|

@ -1,4 +1,4 @@

|

||||||

# modify time: 202312081656, you can modify here to trigger Docker Build action

|

# modify time: 202312081709, you can modify here to trigger Docker Build action

|

||||||

|

|

||||||

FROM python:3.10-slim-bullseye

|

FROM python:3.10-slim-bullseye

|

||||||

LABEL maintainer="Websoft9<help@websoft9.com>"

|

LABEL maintainer="Websoft9<help@websoft9.com>"

|

||||||

|

|

@ -16,18 +16,15 @@ RUN apt update && apt install -y --no-install-recommends curl git jq cron iprout

|

||||||

git clone --depth=1 $library_repo \

|

git clone --depth=1 $library_repo \

|

||||||

mv docker-library w9library && \

|

mv docker-library w9library && \

|

||||||

rm -rf w9library/.github && \

|

rm -rf w9library/.github && \

|

||||||

|

|

||||||

# Prepare media

|

# Prepare media

|

||||||

if [ ! -f ./media.zip ]; then \

|

if [ ! -f ./media.zip ]; then \

|

||||||

wget $websoft9_artifact/plugin/media/media-latest.zip -O ./media.zip && \

|

wget $websoft9_artifact/plugin/media/media-latest.zip -O ./media.zip && \

|

||||||

unzip media.zip \

|

unzip media.zip \

|

||||||

fi \

|

fi \

|

||||||

mv media* w9media \

|

mv media* w9media \

|

||||||

|

|

||||||

git clone --depth=1 https://github.com/swagger-api/swagger-ui.git && \

|

git clone --depth=1 https://github.com/swagger-api/swagger-ui.git && \

|

||||||

wget https://cdn.redoc.ly/redoc/latest/bundles/redoc.standalone.js && \

|

wget https://cdn.redoc.ly/redoc/latest/bundles/redoc.standalone.js && \

|

||||||

cp redoc.standalone.js swagger-ui/dist && \

|

cp redoc.standalone.js swagger-ui/dist && \

|

||||||

|

|

||||||

git clone --depth=1 $websoft9_repo ./w9source && \

|

git clone --depth=1 $websoft9_repo ./w9source && \

|

||||||

cp -r ./w9media ./media && \

|

cp -r ./w9media ./media && \

|

||||||

cp -r ./w9library ./library && \

|

cp -r ./w9library ./library && \

|

||||||

|

|

|

||||||

|

|

@ -1,126 +0,0 @@

|

||||||

## 0.8.30-rc1 release on 2023-11-16

|

|

||||||

1. improve all plugins githubaction

|

|

||||||

2. install plugin by shell

|

|

||||||

## 0.8.29 release on 2023-11-04

|

|

||||||

|

|

||||||

1. gitea,myapps,appstore update

|

|

||||||

2. apphub domains

|

|

||||||

3. apphub docs nginx config

|

|

||||||

|

|

||||||

## 0.8.28 release on 2023-11-01

|

|

||||||

|

|

||||||

1. improve dockerfile to reduce image size

|

|

||||||

2. fixed update_zip.sh

|

|

||||||

3. hide websoft9 containers

|

|

||||||

|

|

||||||

## 0.8.27 release on 2023-10-31

|

|

||||||

|

|

||||||

1. new websoft9 init

|

|

||||||

|

|

||||||

## 0.8.26 release on 2023-09-27

|

|

||||||

|

|

||||||

1. appmanage change to apphub

|

|

||||||

|

|

||||||

## 0.8.20 release on 2023-08-23

|

|

||||||

|

|

||||||

1. appmanage config files error: bug fix

|

|

||||||

|

|

||||||

## 0.8.19 release on 2023-08-23

|

|

||||||

|

|

||||||

1. New App Store preview push function added

|

|

||||||

2. Fix some known bugs

|

|

||||||

|

|

||||||

## 0.8.18 release on 2023-08-17

|

|

||||||

|

|

||||||

1. appmanage volumes bug edit

|

|

||||||

|

|

||||||

## 0.8.15 release on 2023-08-17

|

|

||||||

|

|

||||||

1. service menu bug

|

|

||||||

|

|

||||||

## 0.8.14 release on 2023-08-16

|

|

||||||

|

|

||||||

1. myapps plugins refresh bug

|

|

||||||

|

|

||||||

## 0.8.13 release on 2023-08-15

|

|

||||||

|

|

||||||

1. update plugins

|

|

||||||

2. fix bug data save in session

|

|

||||||

|

|

||||||

## 0.8.12 release on 2023-08-12

|

|

||||||

|

|

||||||

1. navigator plugin install way change

|

|

||||||

2. update plugin

|

|

||||||

|

|

||||||

## 0.8.11 release on 2023-08-03

|

|

||||||

|

|

||||||

1. Optimize interface calls

|

|

||||||

2. library artifacts directory: websoft9/plugin/library

|

|

||||||

3. add init apps: nocobase, affine

|

|

||||||

|

|

||||||

## 0.8.10 release on 2023-08-01

|

|

||||||

|

|

||||||

1. improve update.sh

|

|

||||||

2. add docs to artifacts

|

|

||||||

3. improve server's hostname

|

|

||||||

|

|

||||||

## 0.8.8 release on 2023-07-27

|

|

||||||

|

|

||||||

fixed update search api bug

|

|

||||||

|

|

||||||

## 0.8.5 release on 2023-07-26

|

|

||||||

|

|

||||||

add docs

|

|

||||||

|

|

||||||

## 0.8.4 release on 2023-07-26

|

|

||||||

|

|

||||||

add appstore search api

|

|

||||||

|

|

||||||

## 0.8.2 release on 2023-07-24

|

|

||||||

|

|

||||||

1. install from artifacts

|

|

||||||

2. add extra version.json to artifacts

|

|

||||||

|

|

||||||

## 0.7.2 release on 2023-06-25

|

|

||||||

|

|

||||||

1. appmanage: upgrade the auto-update API

|

|

||||||

|

|

||||||

## 0.7.1 release on 2023-06-21

|

|

||||||

|

|

||||||

1. appmanage: fix bug when the version file is accidentally deleted

|

|

||||||

2. Change the auto-update frequency to once a day

|

|

||||||

3. Update script: fix bug when the version file does not exist

|

|

||||||

|

|

||||||

## 0.7.0 release on 2023-06-20

|

|

||||||

|

|

||||||

1. appstore: add update feature

|

|

||||||

2. myapps: feature improvements

|

|

||||||

3. Add settings feature

|

|

||||||

|

|

||||||

## 0.6.0 release on 2023-06-17

|

|

||||||

|

|

||||||

1. Add wordpress to the store

|

|

||||||

2. Remove moodle from the store

|

|

||||||

3. Modify redmine

|

|

||||||

4. Upgrade discuzq, zabbix

|

|

||||||

5. Add automatic app store update feature

|

|

||||||

|

|

||||||

## 0.4.0 release on 2023-06-15

|

|

||||||

|

|

||||||

1. Take owncloud offline for testing

|

|

||||||

|

|

||||||

## 0.3.0 release on 2023-06-06

|

|

||||||

|

|

||||||

1. Update the appmanage docker image to 0.3.0

|

|

||||||

2. Fix bug where prestashop was inaccessible

|

|

||||||

3. Fix bug where odoo could not be installed

|

|

||||||

|

|

||||||

## 0.2.0 release on 2023-06-03

|

|

||||||

|

|

||||||

1. Update the appmanage docker image to 0.2.0

|

|

||||||

2. Portainer plugin: fix auto-login bug

|

|

||||||

3. My Apps plugin: fix bug fetching containers on first use

|

|

||||||

|

|

||||||

## 0.1.0 release on 2023-05-26

|

|

||||||

|

|

||||||

1. stackhub pre-release, basic features implemented

|

|

||||||

|

|

@ -1,68 +0,0 @@

|

||||||

# Contributing to Websoft9

|

|

||||||

|

|

||||||

From opening a bug report to creating a pull request: every contribution is appreciated and welcome.

|

|

||||||

|

|

||||||

If you're planning to implement a new feature or change the API, please [create an issue](https://github.com/websoft9/websoft9/issues/new/choose) first. This way we can ensure that your precious work is not in vain.

|

|

||||||

|

|

||||||

|

|

||||||

## Not Sure About the Architecture?

|

|

||||||

|

|

||||||

It's important to understand the design [architecture of Websoft9](docs/architecture.md) first.

|

|

||||||

|

|

||||||

## Fork

|

|

||||||

|

|

||||||

Contributors may only fork the [main branch](https://github.com/Websoft9/websoft9/tree/main) and open pull requests against it. Maintainers don't accept any PR to the **production branch**.

|

|

||||||

|

|

||||||

## Branch

|

|

||||||

|

|

||||||

This repository has these branches:

|

|

||||||

|

|

||||||

* **Contributor's branch**: Developers can fork the main branch as their development branch at any time

|

|

||||||

* **main branch**: The only branch that accepts PRs from a Contributor's branch

|

|

||||||

* **production branch**: Used for version releases; it cannot be modified directly and only merges PRs from the **main branch**

|

|

||||||

|

|

||||||

|

|

||||||

Flow: Contributor's branch → main branch → production branch

|

|

||||||

|

|

||||||

|

|

||||||

## Pull request

|

|

||||||

|

|

||||||

[Pull requests](https://docs.github.com/pull-requests) let you tell others about changes you've pushed to a branch in a repository on GitHub.

|

|

||||||

|

|

||||||

#### When is a PR produced?

|

|

||||||

|

|

||||||

* A Contributor commits to the main branch

|

|

||||||

* The main branch commits to the production branch

|

|

||||||

|

|

||||||

#### How to deal with a PR?

|

|

||||||

|

|

||||||

1. [pull request reviews](https://docs.github.com/en/pull-requests/collaborating-with-pull-requests/reviewing-changes-in-pull-requests/about-pull-request-reviews)

|

|

||||||

2. Merge the PR and run CI/CD for it

|

|

||||||

|

|

||||||

## DevOps principle

|

|

||||||

|

|

||||||

DevOps follows the same thinking that **[5m1e](https://www.dgmfmoldclamps.com/what-is-5m1e-in-injection-molding-industry/)** applies to manufacturing companies

|

|

||||||

|

|

||||||

We follow the development principles of minimization and rapid release

|

|

||||||

|

|

||||||

### Version

|

|

||||||

|

|

||||||

Use *[[major].[minor].[patch]](https://semver.org/lang/zh-CN/)* for version numbers and [version.json](../version.json) for version dependencies
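The `[major].[minor].[patch]` scheme can be compared numerically rather than as plain strings. The following is a minimal Python sketch, not part of the repository: the helper name `parse_version` is hypothetical, the sample versions are taken from the changelog entries, and pre-release suffixes such as `-rc1` are handled in a simplified way that does not implement the full Semantic Versioning precedence rules.

```python
import re
from typing import Tuple

# Matches "major.minor.patch" with an optional "-suffix" such as "-rc1".
_VERSION_RE = re.compile(r"^(\d+)\.(\d+)\.(\d+)(?:-([0-9A-Za-z.]+))?$")


def parse_version(text: str) -> Tuple[int, int, int, int, str]:
    """Return a sortable key for a '[major].[minor].[patch]' string (hypothetical helper)."""
    match = _VERSION_RE.match(text.strip())
    if match is None:
        raise ValueError(f"not a major.minor.patch version: {text!r}")
    major, minor, patch, pre = match.groups()
    # 0 marks a pre-release and 1 a final release, so 0.8.30-rc1 sorts before 0.8.30.
    is_final = 0 if pre else 1
    return int(major), int(minor), int(patch), is_final, pre or ""


versions = ["0.8.29", "0.8.30-rc1", "0.8.4", "0.8.28"]
ordered = sorted(versions, key=parse_version)
print(ordered)
```

Sorting by the parsed key places `0.8.4` before `0.8.28`, which a plain string sort would get wrong.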

|

|

||||||

|

|

||||||

### Artifact

|

|

||||||

|

|

||||||

Websoft9 uses the following [artifact](https://jfrog.com/devops-tools/article/what-is-a-software-artifact/) stores for different purposes:

|

|

||||||

|

|

||||||

* **Dockerhub for image**: Access [Websoft9 docker images](https://hub.docker.com/u/websoft9dev) on Dockerhub

|

|

||||||

* **Azure Storage for files**: Access [packages list](https://artifact.azureedge.net/release?restype=container&comp=list) at [Azure Storage](https://learn.microsoft.com/en-us/azure/storage/storage-dotnet-how-to-use-blobs#list-the-blobs-in-a-container)

|

|

||||||

|

|

||||||

### Tags

|

|

||||||

|

|

||||||

- Type tags: Bug, Enhancement, Documentation

|

|

||||||

- Stage tags: PRD, Dev, QA (including deployment), Documentation

|

|

||||||

|

|

||||||

### WorkFlow

|

|

||||||

|

|

||||||

Websoft9 uses the [Production branch with GitLab flow](https://cm-gitlab.stanford.edu/help/workflow/gitlab_flow.md#production-branch-with-gitlab-flow) for development collaboration

|

|

||||||

|

|

||||||

> The [GitLab workflow](https://docs.gitlab.com/ee/topics/gitlab_flow.html) is an improved model for Git

|

|

||||||

|

|

@ -1,167 +0,0 @@

|

||||||

This program is released under LGPL-3.0 and with the additional Terms:

|

|

||||||

Without authorization, it is not allowed to publish free or paid image based on this program in any Cloud platform's Marketplace.

|

|

||||||

|

|

||||||

GNU LESSER GENERAL PUBLIC LICENSE

|

|

||||||

Version 3, 29 June 2007

|

|

||||||

|

|

||||||

Copyright (C) 2007 Free Software Foundation, Inc. <https://fsf.org/>

|

|

||||||

Everyone is permitted to copy and distribute verbatim copies

|

|

||||||

of this license document, but changing it is not allowed.

|

|

||||||

|

|

||||||

This version of the GNU Lesser General Public License incorporates

|

|

||||||

the terms and conditions of version 3 of the GNU General Public

|

|

||||||

License, supplemented by the additional permissions listed below.

|

|

||||||

|

|

||||||

0. Additional Definitions.

|

|

||||||

|

|

||||||

As used herein, "this License" refers to version 3 of the GNU Lesser

|

|

||||||

General Public License, and the "GNU GPL" refers to version 3 of the GNU

|

|

||||||

General Public License.

|

|

||||||

|

|

||||||

"The Library" refers to a covered work governed by this License,

|

|

||||||

other than an Application or a Combined Work as defined below.

|

|

||||||

|

|

||||||

An "Application" is any work that makes use of an interface provided

|

|

||||||

by the Library, but which is not otherwise based on the Library.

|

|

||||||

Defining a subclass of a class defined by the Library is deemed a mode

|

|

||||||

of using an interface provided by the Library.

|

|

||||||

|

|

||||||

A "Combined Work" is a work produced by combining or linking an

|

|

||||||

Application with the Library. The particular version of the Library

|

|

||||||

with which the Combined Work was made is also called the "Linked

|

|

||||||

Version".

|

|

||||||

|

|

||||||

The "Minimal Corresponding Source" for a Combined Work means the

|

|

||||||

Corresponding Source for the Combined Work, excluding any source code

|

|

||||||

for portions of the Combined Work that, considered in isolation, are

|

|

||||||

based on the Application, and not on the Linked Version.

|

|

||||||

|

|

||||||

The "Corresponding Application Code" for a Combined Work means the

|

|

||||||

object code and/or source code for the Application, including any data

|

|

||||||

and utility programs needed for reproducing the Combined Work from the

|

|

||||||

Application, but excluding the System Libraries of the Combined Work.

|

|

||||||

|

|

||||||

1. Exception to Section 3 of the GNU GPL.

|

|

||||||

|

|

||||||

You may convey a covered work under sections 3 and 4 of this License

|

|

||||||

without being bound by section 3 of the GNU GPL.

|

|

||||||

|

|

||||||

2. Conveying Modified Versions.

|

|

||||||

|

|

||||||

If you modify a copy of the Library, and, in your modifications, a

|

|

||||||

facility refers to a function or data to be supplied by an Application

|

|

||||||

that uses the facility (other than as an argument passed when the

|

|

||||||

facility is invoked), then you may convey a copy of the modified

|

|

||||||

version:

|

|

||||||

|

|

||||||

a) under this License, provided that you make a good faith effort to

|

|

||||||

ensure that, in the event an Application does not supply the

|

|

||||||

function or data, the facility still operates, and performs

|

|

||||||

whatever part of its purpose remains meaningful, or

|

|

||||||

|

|

||||||

b) under the GNU GPL, with none of the additional permissions of

|

|

||||||

this License applicable to that copy.

|

|

||||||

|

|

||||||

3. Object Code Incorporating Material from Library Header Files.

|

|

||||||

|

|

||||||

The object code form of an Application may incorporate material from

|

|

||||||

a header file that is part of the Library. You may convey such object

|

|

||||||

code under terms of your choice, provided that, if the incorporated

|

|

||||||

material is not limited to numerical parameters, data structure

|

|

||||||

layouts and accessors, or small macros, inline functions and templates

|

|

||||||

(ten or fewer lines in length), you do both of the following:

|

|

||||||

|

|

||||||

a) Give prominent notice with each copy of the object code that the

|

|

||||||

Library is used in it and that the Library and its use are

|

|

||||||

covered by this License.

|

|

||||||

|

|

||||||

b) Accompany the object code with a copy of the GNU GPL and this license

|

|

||||||

document.

|

|

||||||

|

|

||||||

4. Combined Works.

|

|

||||||

|

|

||||||

You may convey a Combined Work under terms of your choice that,

|

|

||||||

taken together, effectively do not restrict modification of the

|

|

||||||

portions of the Library contained in the Combined Work and reverse

|

|

||||||

engineering for debugging such modifications, if you also do each of

|

|

||||||

the following:

|

|

||||||

|

|

||||||

a) Give prominent notice with each copy of the Combined Work that

|

|

||||||

the Library is used in it and that the Library and its use are

|

|

||||||

covered by this License.

|

|

||||||

|

|

||||||

b) Accompany the Combined Work with a copy of the GNU GPL and this license

|

|

||||||

document.

|

|

||||||

|

|

||||||

c) For a Combined Work that displays copyright notices during

|

|

||||||

execution, include the copyright notice for the Library among

|

|

||||||

these notices, as well as a reference directing the user to the

|

|

||||||

copies of the GNU GPL and this license document.

|

|

||||||

|

|

||||||

d) Do one of the following:

|

|

||||||

|

|

||||||

0) Convey the Minimal Corresponding Source under the terms of this

|

|

||||||

License, and the Corresponding Application Code in a form

|

|

||||||

suitable for, and under terms that permit, the user to

|

|

||||||

recombine or relink the Application with a modified version of

|

|

||||||

the Linked Version to produce a modified Combined Work, in the

|

|

||||||

manner specified by section 6 of the GNU GPL for conveying

|

|

||||||

Corresponding Source.

|

|

||||||

|

|

||||||

1) Use a suitable shared library mechanism for linking with the

|

|

||||||

Library. A suitable mechanism is one that (a) uses at run time

|

|

||||||

a copy of the Library already present on the user's computer

|

|

||||||

system, and (b) will operate properly with a modified version

|

|

||||||

of the Library that is interface-compatible with the Linked

|

|

||||||

Version.

|

|

||||||

|

|

||||||

e) Provide Installation Information, but only if you would otherwise

|

|

||||||

be required to provide such information under section 6 of the

|

|

||||||

GNU GPL, and only to the extent that such information is

|

|

||||||

necessary to install and execute a modified version of the

|

|

||||||

Combined Work produced by recombining or relinking the

|

|

||||||

Application with a modified version of the Linked Version. (If

|

|

||||||

you use option 4d0, the Installation Information must accompany

|

|

||||||

the Minimal Corresponding Source and Corresponding Application

|

|

||||||

Code. If you use option 4d1, you must provide the Installation

|

|

||||||

Information in the manner specified by section 6 of the GNU GPL

|

|

||||||

for conveying Corresponding Source.)

|

|

||||||

|

|

||||||

5. Combined Libraries.

|

|

||||||

|

|

||||||

You may place library facilities that are a work based on the

|

|

||||||

Library side by side in a single library together with other library

|

|

||||||

facilities that are not Applications and are not covered by this

|

|

||||||

License, and convey such a combined library under terms of your

|

|

||||||

choice, if you do both of the following:

|

|

||||||

|

|

||||||

a) Accompany the combined library with a copy of the same work based

|

|

||||||

on the Library, uncombined with any other library facilities,

|

|

||||||

conveyed under the terms of this License.

|

|

||||||

|

|

||||||

b) Give prominent notice with the combined library that part of it

|

|

||||||

is a work based on the Library, and explaining where to find the

|

|

||||||

accompanying uncombined form of the same work.

|

|

||||||

|

|

||||||

6. Revised Versions of the GNU Lesser General Public License.

|

|

||||||

|

|

||||||

The Free Software Foundation may publish revised and/or new versions

|

|

||||||

of the GNU Lesser General Public License from time to time. Such new

|

|

||||||

versions will be similar in spirit to the present version, but may

|

|

||||||

differ in detail to address new problems or concerns.

|

|

||||||

|

|

||||||

Each version is given a distinguishing version number. If the

|

|

||||||

Library as you received it specifies that a certain numbered version

|

|

||||||

of the GNU Lesser General Public License "or any later version"

|

|

||||||

applies to it, you have the option of following the terms and

|

|

||||||

conditions either of that published version or of any later version

|

|

||||||

published by the Free Software Foundation. If the Library as you

|

|

||||||

received it does not specify a version number of the GNU Lesser

|

|

||||||

General Public License, you may choose any version of the GNU Lesser

|

|

||||||

General Public License ever published by the Free Software Foundation.

|

|

||||||

|

|

||||||

If the Library as you received it specifies that a proxy can decide

|

|

||||||

whether future versions of the GNU Lesser General Public License shall

|

|

||||||

apply, that proxy's public statement of acceptance of any version is

|

|

||||||

permanent authorization for you to choose that version for the

|

|

||||||

Library.

|

|

||||||

|

|

@ -1,72 +0,0 @@

|

||||||

[](http://www.gnu.org/licenses/gpl-3.0)

|

|

||||||

[](https://github.com/websoft9/websoft9)

|

|

||||||

[](https://github.com/websoft9/websoft9)

|

|

||||||

[](https://github.com/websoft9/websoft9)

|

|

||||||

|

|

||||||

# What is Websoft9?

|

|

||||||

|

|

||||||

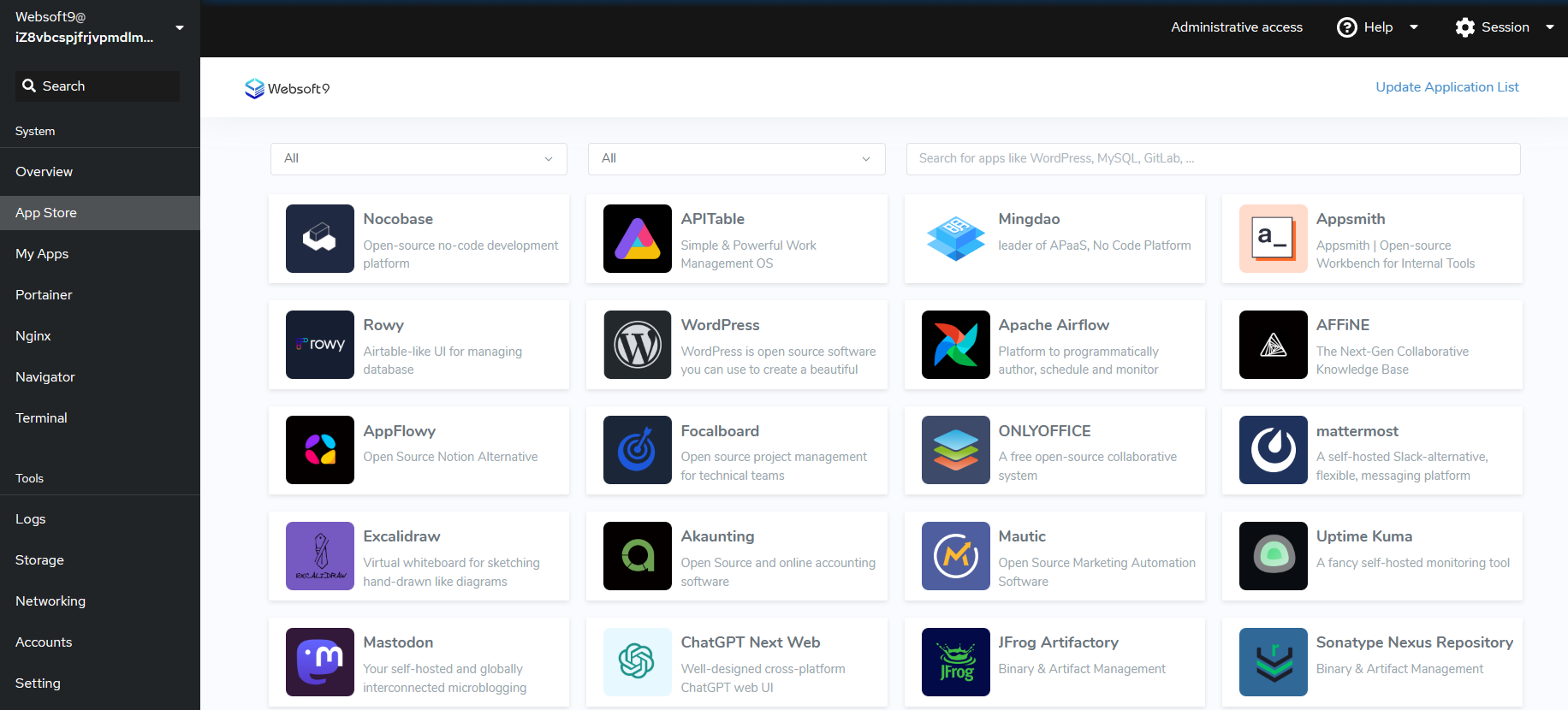

Websoft9 is a web-based PaaS platform for running 200+ popular [open source applications](https://github.com/Websoft9/docker-library/tree/main/apps) on your own server.

|

|

||||||

|

|

||||||

Websoft9 helps you run multiple applications on a single server; we believe that running microservices on a single machine is reasonable. In fact, it becomes more and more valuable as computing power increases.

|

|

||||||

|

|

||||||

Although cloud native emphasizes high availability and clustering, most of the time applications do not need to implement complex clusters or K8S.

|

|

||||||

|

|

||||||

Websoft9's [architecture](https://github.com/Websoft9/websoft9/blob/main/docs/architecture.md) is simple: it does not create any new technology stack. We fully utilize popular technology components to achieve our product goals, allowing users and developers to participate in our projects without the need to learn new technologies.

|

|

||||||

|

|

||||||

## Demos

|

|

||||||

|

|

||||||

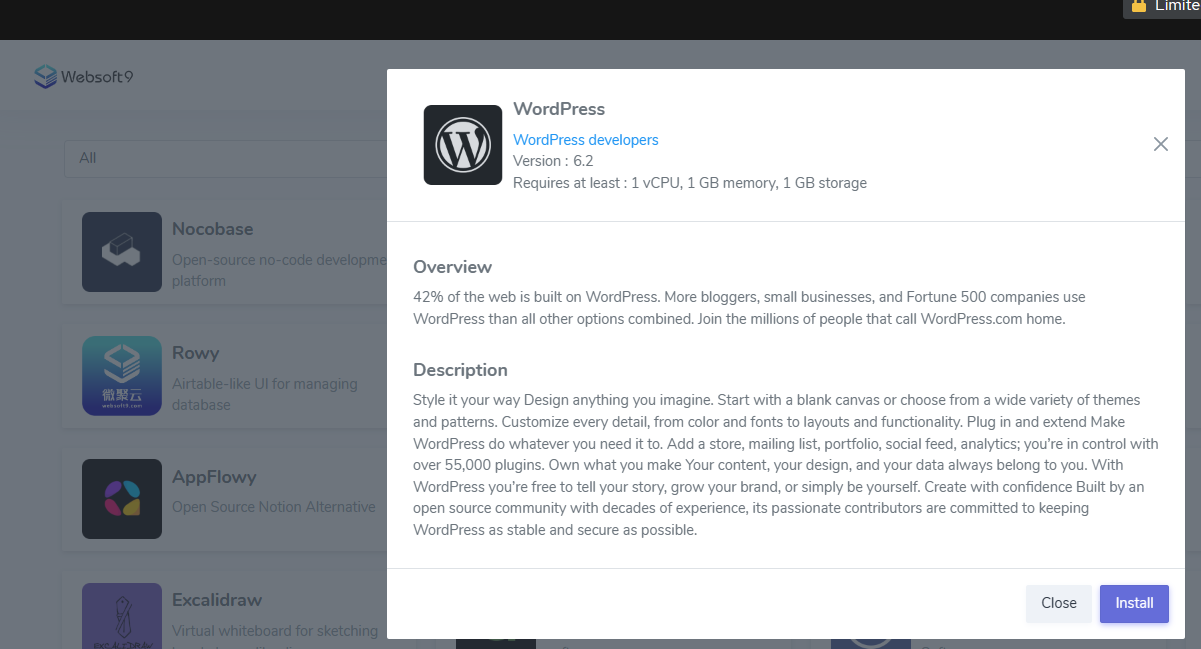

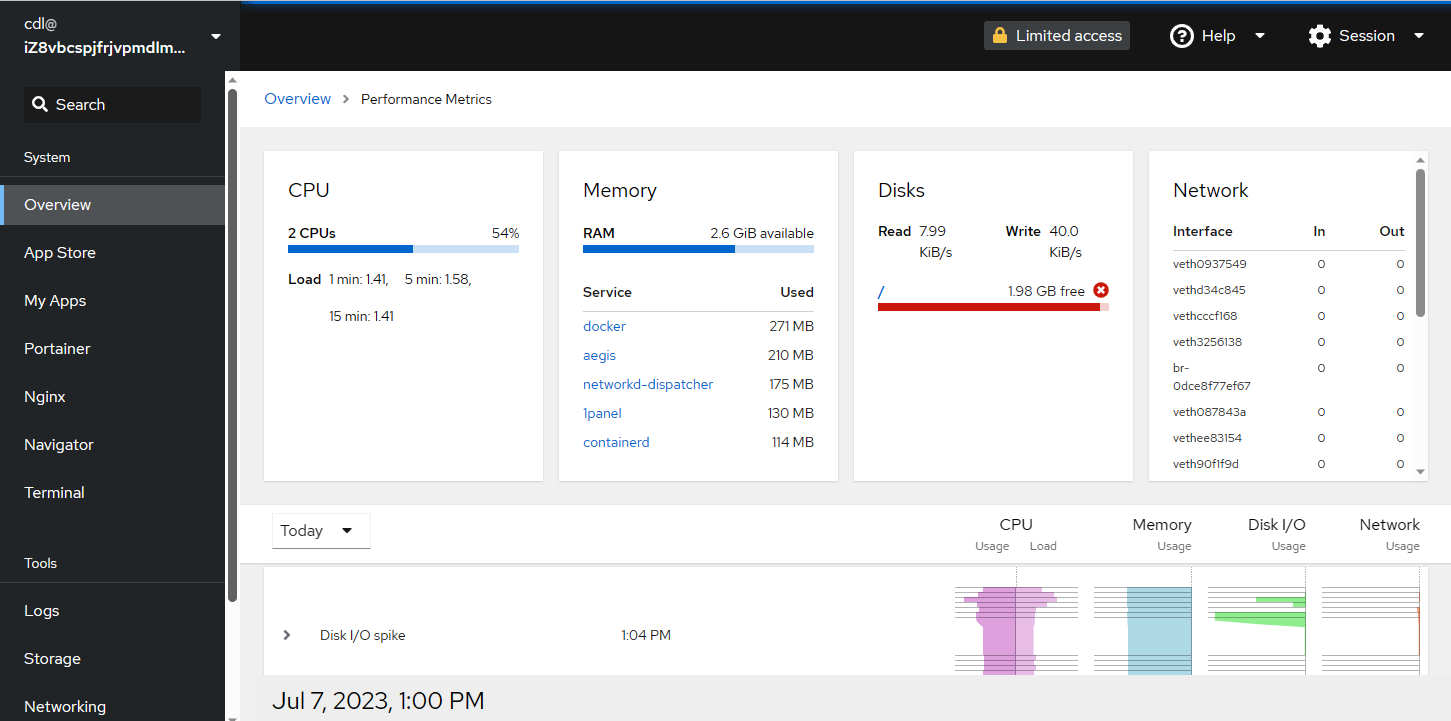

You can see the screenshots below:

|

|

||||||

|

|

||||||

|  |  |  |

|

|

||||||

| --------------------------------------------------------------------------------------------------- | --------------------------------------------------------------------------------------------------- | --------------------------------------------------------------------------------------------------- |

|

|

||||||

|  |  |  |

|

|

||||||

|

|

||||||

## Features

|

|

||||||

|

|

||||||

- Applications listing

|

|

||||||

- Install 200+ template applications without any configuration

|

|

||||||

- Web-based file browser to manage files and folders

|

|

||||||

- Manage user accounts

|

|

||||||

- Use a terminal on a remote server in your local web browser

|

|

||||||

- Nginx GUI for proxying and free SSL with Let's Encrypt

|

|

||||||

- Deploy, configure, troubleshoot and secure containers in minutes on Kubernetes, Docker, and Swarm in any data center, cloud, network edge or IIOT device.

|

|

||||||

- Manage your Linux system through a GUI: inspect and change network settings, configure a firewall, manage storage, browse and search system logs, inspect a system’s hardware, and inspect and interact with systemd-based services

|

|

||||||

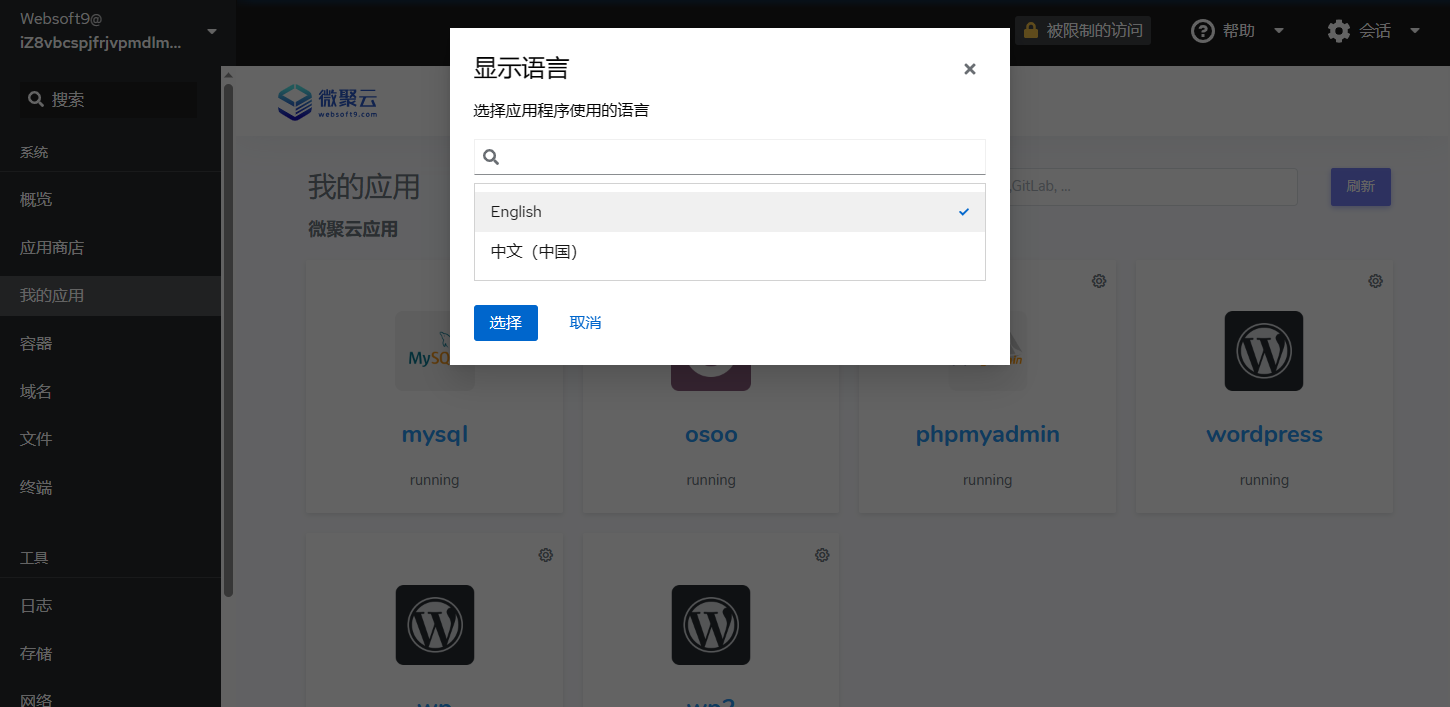

- Supported languages: English, Chinese(中文)

|

|

||||||

|

|

||||||

# Installation

|

|

||||||

|

|

||||||

You need a user with root privileges to install, upgrade, or uninstall Websoft9. If you are a non-root user, you can run `sudo su` first.

|

|

||||||

|

|

||||||

## Install & Upgrade

|

|

||||||

|

|

||||||

```

|

|

||||||

# Install by default

|

|

||||||

wget -O install.sh https://websoft9.github.io/websoft9/install/install.sh && bash install.sh

|

|

||||||

|

|

||||||

|

|

||||||

# Install Websoft9 with parameters

|

|

||||||

wget -O install.sh https://websoft9.github.io/websoft9/install/install.sh && bash install.sh --port 9000 --channel release --path "/data/websoft9/source" --version "latest"

|

|

||||||

```

|

|

||||||

After installation, access it at **http://Internet IP:9000** and log in with a **Linux user** account

|

|

||||||

|

|

||||||

## Uninstall

|

|

||||||

|

|

||||||

```

|

|

||||||

# Uninstall by default

|

|

||||||

curl https://websoft9.github.io/websoft9/install/uninstall.sh | bash

|

|

||||||

|

|

||||||

# Uninstall all

|

|

||||||

wget -O - https://websoft9.github.io/websoft9/install/uninstall.sh | bash /dev/stdin --cockpit --files

|

|

||||||

```

|

|

||||||

|

|

||||||

# Contributing

|

|

||||||

|

|

||||||

Follow the [contributing guidelines](CONTRIBUTING.md) if you want to propose a change in the Websoft9 core. For more information about participating in the community and contributing to the Websoft9 project, see [this page](https://support.websoft9.com/docs/community/contributing).

|

|

||||||

|

|

||||||

- Documentation for [Websoft9 core maintainers](docs/MAINTAINERS.md)

|

|

||||||

- Documentation for maintainers of Docker-based application templates is in the [docker-library](https://github.com/Websoft9/docker-library).

|

|

||||||

- [Articles promoting Websoft9](https://github.com/Websoft9/websoft9/issues/327)

|

|

||||||

|

|

||||||

# License

|

|

||||||

|

|

||||||

Websoft9 is licensed under the [LGPL-3.0](/License.md) with additional terms: publishing a free or paid image based on this repository in any cloud platform's marketplace is not allowed without authorization

|

|

||||||

|

|

@ -1,15 +0,0 @@

|

||||||

# Security Policy

|

|

||||||

|

|

||||||

## Versions

|

|

||||||

|

|

||||||

As an open source product, we will only patch the latest major version for security vulnerabilities. Previous versions of Websoft9 will not be retroactively patched.

|

|

||||||

|

|

||||||

## Disclosing

|

|

||||||

|

|

||||||

You can get in touch with us regarding a vulnerability via [issue](https://github.com/Websoft9/websoft9/issues) or email at help@websoft9.com.

|

|

||||||

|

|

||||||

You can also disclose via huntr.dev. If you believe you have found a vulnerability, please disclose it on huntr and let us know.

|

|

||||||

|

|

||||||

https://huntr.dev/bounties/disclose

|

|

||||||

|

|

||||||

This will enable us to review the vulnerability and potentially reward you for your work.

|

|

||||||

|

|

@ -1,2 +0,0 @@

|

||||||

1. improve all plugins githubaction

|

|

||||||

2. install plugin by shell

|

|

||||||

|

|

@ -1,24 +0,0 @@

|

||||||

# Cockpit

|

|

||||||

|

|

||||||

Cockpit is used as the backend service gateway. We have not modified the Cockpit core; we only improve the installation and modify the configuration for Websoft9

|

|

||||||

|

|

||||||

## Install

|

|

||||||

|

|

||||||

```

|

|

||||||

# default install

|

|

||||||

wget https://websoft9.github.io/websoft9/install/install_cockpit.sh && bash install_cockpit.sh

|

|

||||||

|

|

||||||

# define Cockpit port and install

|

|

||||||

wget https://websoft9.github.io/websoft9/install/install_cockpit.sh && bash install_cockpit.sh --port 9099

|

|

||||||

```

|

|

||||||

|

|

||||||

## Development

|

|

||||||

|

|

||||||

Developers should improve this code:

|

|

||||||

|

|

||||||

- Install and Upgrade Cockpit: */install/install_cockpit.sh*

|

|

||||||

|

|

||||||

- Override the default menus: */cockpit/menu_override*

|

|

||||||

> shell.override.json is used for the top menu of Cockpit. Override function until Cockpit 297

|

|

||||||

|

|

||||||

- Cockpit configuration file: */cockpit/cockpit.conf*

|

|

||||||

|

|

@ -1,5 +0,0 @@

|

||||||

# docs: https://cockpit-project.org/guide/latest/cockpit.conf.5.html

|

|

||||||

|

|

||||||

[WebService]

|

|

||||||

AllowUnencrypted = true

|

|

||||||

LoginTitle= Websoft9 - Linux AppStore
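The removed `cockpit.conf` is a small INI-style file, so its settings can be inspected with Python's standard `configparser`. This is an illustrative sketch only: the file content is copied into a string rather than read from disk, and nothing here reflects how Cockpit itself loads the file.

```python
import configparser

# Content of the removed cockpit.conf, inlined so the example is self-contained.
COCKPIT_CONF = """\
[WebService]
AllowUnencrypted = true
LoginTitle= Websoft9 - Linux AppStore
"""

parser = configparser.ConfigParser()
# Keep option names case-sensitive; configparser lowercases them by default.
parser.optionxform = str
parser.read_string(COCKPIT_CONF)

# getboolean() understands "true"/"false"; get() strips the space after "=".
allow_unencrypted = parser.getboolean("WebService", "AllowUnencrypted")
login_title = parser.get("WebService", "LoginTitle")
print(allow_unencrypted, repr(login_title))
```

A check like this could be used to confirm that `AllowUnencrypted` is deliberately enabled before an image is published.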

|

|

||||||

|

|

@ -1,3 +0,0 @@

|

||||||

{

|

|

||||||

"tools": null

|

|

||||||

}

|

|

||||||

|

|

@ -1,3 +0,0 @@

|

||||||

{

|

|

||||||

"tools": null

|

|

||||||

}

|

|

||||||

|

|

@ -1,3 +0,0 @@

|

||||||

{

|

|

||||||

"menu": null

|

|

||||||

}

|

|

||||||

|

|

@ -1,57 +0,0 @@

|

||||||

{

|

|

||||||

"menu": null,

|

|

||||||

"tools": {

|

|

||||||

"index": {

|

|

||||||

"label": "Networking",

|

|

||||||

"order": 40,

|

|

||||||

"docs": [

|

|

||||||

{

|

|

||||||

"label": "Managing networking bonds",

|

|

||||||

"url": "https://access.redhat.com/documentation/en-us/red_hat_enterprise_linux/8/html/managing_systems_using_the_rhel_8_web_console/configuring-network-bonds-using-the-web-console_system-management-using-the-rhel-8-web-console"

|

|

||||||

},

|

|

||||||

{

|

|

||||||

"label": "Managing networking teams",

|

|

||||||

"url": "https://access.redhat.com/documentation/en-us/red_hat_enterprise_linux/8/html/managing_systems_using_the_rhel_8_web_console/configuring-network-teams-using-the-web-console_system-management-using-the-rhel-8-web-console"

|

|

||||||

},

|

|

||||||

{

|

|

||||||

"label": "Managing networking bridges",

|

|

||||||

"url": "https://access.redhat.com/documentation/en-us/red_hat_enterprise_linux/8/html/managing_systems_using_the_rhel_8_web_console/configuring-network-bridges-in-the-web-console_system-management-using-the-rhel-8-web-console"

|

|

||||||

},

|

|

||||||

{

|

|

||||||

"label": "Managing VLANs",

|

|

||||||

"url": "https://access.redhat.com/documentation/en-us/red_hat_enterprise_linux/8/html/managing_systems_using_the_rhel_8_web_console/configuring-vlans-in-the-web-console_system-management-using-the-rhel-8-web-console"

|

|

||||||

},

|

|

||||||

{

|

|

||||||

"label": "Managing firewall",

|

|

||||||

"url": "https://access.redhat.com/documentation/en-us/red_hat_enterprise_linux/8/html/managing_systems_using_the_rhel_8_web_console/managing_firewall_using_the_web_console"

|

|

||||||

}

|

|

||||||

],

|

|

||||||

"keywords": [

|

|

||||||

{

|

|

||||||

"matches": [

|

|

||||||

"network",

|

|

||||||

"interface",

|

|

||||||

"bridge",

|

|

||||||

"vlan",

|

|

||||||

"bond",

|

|

||||||

"team",

|

|

||||||

"port",

|

|

||||||

"mac",

|

|

||||||

"ipv4",

|

|

||||||

"ipv6"

|

|

||||||

]

|

|

||||||

},

|

|

||||||

{

|

|

||||||

"matches": [

|

|

||||||

"firewall",

|

|

||||||

"firewalld",

|

|

||||||

"zone",

|

|

||||||

"tcp",

|

|

||||||

"udp"

|

|

||||||

],

|

|

||||||

"goto": "/network/firewall"

|

|

||||||

}

|

|

||||||

]

|

|

||||||

}

|

|

||||||

}

|

|

||||||

}
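The override files above set keys such as `"menu"` or `"tools"` to `null`. Assuming they follow JSON Merge Patch semantics (RFC 7396), which is how Cockpit's manifest override documentation describes them, a `null` value removes the key from the package manifest and any other value replaces or merges into it. The sketch below illustrates that rule; the `manifest` dictionary is a hypothetical stand-in for a stock Cockpit manifest, and the override is shortened from the file shown above.

```python
import json
from typing import Any


def merge_patch(target: Any, patch: Any) -> Any:
    """Apply a JSON Merge Patch (RFC 7396) to target and return the result."""
    if not isinstance(patch, dict):
        # A non-object patch replaces the target wholesale.
        return patch
    result = dict(target) if isinstance(target, dict) else {}
    for key, value in patch.items():
        if value is None:
            # null in the patch means "delete this key".
            result.pop(key, None)
        else:
            result[key] = merge_patch(result.get(key), value)
    return result


# Hypothetical stock manifest, invented for illustration only.
manifest = {
    "menu": {"index": {"label": "Networking", "order": 40}},
    "tools": {"firewall": {"label": "Firewall"}},
}
# Shortened form of the override above: drop "menu", add the entry under "tools".
override = json.loads(
    '{"menu": null, "tools": {"index": {"label": "Networking", "order": 40}}}'
)
merged = merge_patch(manifest, override)
print(json.dumps(merged, indent=2))
```

Under this assumption, an override consisting only of `{"tools": null}` or `{"menu": null}` simply hides that section of the corresponding Cockpit package.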

|

|

||||||

|

|

@ -1,3 +0,0 @@

|

||||||

{

|

|

||||||

"tools": null

|

|

||||||

}

|

|

||||||

|

|

@ -1,3 +0,0 @@

|

||||||

{

|

|

||||||

"tools": null

|

|

||||||

}

|

|

||||||

|

|

@ -1,3 +0,0 @@

|

||||||

{

|

|

||||||

"menu": null

|

|

||||||

}

|

|

||||||

|

|

@ -1,29 +0,0 @@

|

||||||

{

|

|

||||||

"locales": {

|

|

||||||

"cs-cz": null,

|

|

||||||

"de-de": null,

|

|

||||||

"es-es": null,

|

|

||||||

"fi-fi": null,

|

|

||||||

"fr-fr": null,

|

|

||||||

"he-il": null,

|

|

||||||

"it-it": null,

|

|

||||||

"ja-jp": null,

|

|

||||||

"ka-ge": null,

|

|

||||||

"ko-kr": null,

|

|

||||||

"nb-no": null,

|

|

||||||

"nl-nl": null,

|

|

||||||

"pl-pl": null,

|

|

||||||

"pt-br": null,

|

|

||||||

"ru-ru": null,

|

|

||||||

"sk-sk": null,

|

|

||||||

"sv-se": null,

|

|

||||||

"tr-tr": null,

|

|

||||||

"uk-ua": null

|

|

||||||

},

|

|

||||||

"docs": [

|

|

||||||

{

|

|

||||||

"label": "Documentation",

|

|

||||||

"url": "https://support.websoft9.com/en/docs/"

|

|

||||||

}

|

|

||||||

]

|

|

||||||

}

|

|

||||||

|

|

@ -1,3 +0,0 @@

|

||||||

{

|

|

||||||

"tools": null

|

|

||||||

}

|

|

||||||

|

|

@ -1,69 +0,0 @@

|

||||||

{

|

|

||||||

"menu": null,

|

|

||||||

"tools": {

|

|

||||||

"index": {

|

|

||||||

"label": "Storage",

|

|

||||||

"order": 30,

|

|

||||||

"docs": [

|

|

||||||

{

|

|

||||||

"label": "Managing partitions",

|

|

||||||

"url": "https://access.redhat.com/documentation/en-us/red_hat_enterprise_linux/8/html/managing_systems_using_the_rhel_8_web_console/managing-partitions-using-the-web-console_system-management-using-the-rhel-8-web-console"

|

|

||||||

},

|

|

||||||

{

|

|

||||||

"label": "Managing NFS mounts",

|

|

||||||

"url": "https://access.redhat.com/documentation/en-us/red_hat_enterprise_linux/8/html/managing_systems_using_the_rhel_8_web_console/managing-nfs-mounts-in-the-web-console_system-management-using-the-rhel-8-web-console"

|

|

||||||

},

|

|

||||||

{

|

|

||||||

"label": "Managing RAIDs",

|

|

||||||

"url": "https://access.redhat.com/documentation/en-us/red_hat_enterprise_linux/8/html/managing_systems_using_the_rhel_8_web_console/managing-redundant-arrays-of-independent-disks-in-the-web-console_system-management-using-the-rhel-8-web-console"

|

|

||||||

},

|

|

||||||

{

|

|

||||||

"label": "Managing LVMs",

|

|

||||||

"url": "https://access.redhat.com/documentation/en-us/red_hat_enterprise_linux/8/html/managing_systems_using_the_rhel_8_web_console/using-the-web-console-for-configuring-lvm-logical-volumes_system-management-using-the-rhel-8-web-console"

|

|

||||||

},

|

|

||||||

{

|

|

||||||

"label": "Managing physical drives",

|

|

||||||

"url": "https://access.redhat.com/documentation/en-us/red_hat_enterprise_linux/8/html/managing_systems_using_the_rhel_8_web_console/using-the-web-console-for-changing-physical-drives-in-volume-groups_system-management-using-the-rhel-8-web-console"

|

|

||||||

},

|

|

||||||

{

|

|

||||||

"label": "Managing VDOs",

|

|

||||||

"url": "https://access.redhat.com/documentation/en-us/red_hat_enterprise_linux/8/html/managing_systems_using_the_rhel_8_web_console/using-the-web-console-for-managing-virtual-data-optimizer-volumes_system-management-using-the-rhel-8-web-console"

|

|

||||||

},

|

|

||||||

{

|

|

||||||

"label": "Using LUKS encryption",

|

|

||||||

"url": "https://access.redhat.com/documentation/en-us/red_hat_enterprise_linux/8/html/managing_systems_using_the_rhel_8_web_console/locking-data-with-luks-password-in-the-rhel-web-console_system-management-using-the-rhel-8-web-console"

|

|

||||||

},

|

|

||||||

{

|

|

||||||

"label": "Using Tang server",

|

|

||||||

"url": "https://access.redhat.com/documentation/en-us/red_hat_enterprise_linux/8/html/managing_systems_using_the_rhel_8_web_console/configuring-automated-unlocking-using-a-tang-key-in-the-web-console_system-management-using-the-rhel-8-web-console"

|

|

||||||

}

|

|

||||||

],

|

|

||||||

"keywords": [

|

|

||||||

{

|

|

||||||

"matches": [

|

|

||||||

"filesystem",

|

|

||||||

"partition",

|

|

||||||

"nfs",

|

|

||||||

"raid",

|

|

||||||

"volume",

|

|

||||||

"disk",

|

|

||||||

"vdo",

|

|

||||||

"iscsi",

|

|

||||||

"drive",

|

|

||||||

"mount",

|

|

||||||

"unmount",

|

|

||||||

"udisks",

|

|

||||||

"mkfs",

|

|

||||||

"format",

|

|

||||||

"fstab",

|

|

||||||

"lvm2",

|

|

||||||

"luks",

|

|

||||||

"encryption",

|

|

||||||

"nbde",

|

|

||||||

"tang"

|

|

||||||

]

|

|

||||||

}

|

|

||||||

]

|

|

||||||

}

|

|

||||||

}

|

|

||||||

}

|

|

||||||

|

|

@ -1,3 +0,0 @@

|

||||||

{

|

|

||||||

"tools": null

|

|

||||||

}

|

|

||||||

|

|

@ -1,147 +0,0 @@

|

||||||

{

|

|

||||||

"tools": {

|

|

||||||

"terminal": null,

|

|

||||||

"services": {

|

|

||||||

"label": "Services",

|

|

||||||

"order": 10,

|

|

||||||

"docs": [

|

|

||||||

{

|

|

||||||

"label": "Managing services",

|

|

||||||

"url": "https://access.redhat.com/documentation/en-us/red_hat_enterprise_linux/8/html/managing_systems_using_the_rhel_8_web_console/managing-services-in-the-web-console_system-management-using-the-rhel-8-web-console"

|

|

||||||

}

|

|

||||||

],

|

|

||||||

"keywords": [

|

|

||||||

{

|

|

||||||

"matches": [

|

|

||||||

"service",

|

|

||||||

"systemd",

|

|

||||||

"target",

|

|

||||||

"socket",

|

|

||||||

"timer",

|

|

||||||

"path",

|

|

||||||

"unit",

|

|

||||||

"systemctl"

|

|

||||||

]

|

|

||||||

},

|

|

||||||

{

|

|

||||||

"matches": [

|

|

||||||

"boot",

|

|

||||||

"mask",

|

|

||||||

"unmask",

|

|

||||||

"restart",

|

|

||||||

"enable",

|

|

||||||

"disable"

|

|

||||||

],

|

|

||||||

"weight": 1

|

|

||||||

}

|

|

||||||

]

|

|

||||||

},

|

|

||||||

"logs": {

|

|

||||||

"label": "Logs",

|

|

||||||

"order": 20,

|

|

||||||

"docs": [

|

|

||||||

{

|

|

||||||

"label": "Reviewing logs",

|

|

||||||

"url": "https://access.redhat.com/documentation/en-us/red_hat_enterprise_linux/8/html/managing_systems_using_the_rhel_8_web_console/reviewing-logs_system-management-using-the-rhel-8-web-console"

|

|

||||||

}

|

|

||||||

],

|

|

||||||

"keywords": [

|

|

||||||

{

|

|

||||||

"matches": [

|

|

||||||

"journal",

|

|

||||||

"warning",

|

|

||||||

"error",

|

|

||||||

"debug"

|

|

||||||

]

|

|

||||||

},

|

|

||||||

{

|

|

||||||

"matches": [

|

|

||||||

"abrt",

|

|

||||||

"crash",

|

|

||||||

"coredump"

|

|

||||||

],

|

|

||||||

"goto": "?tag=abrt-notification"

|

|

||||||

}

|

|

||||||

]

|

|

||||||

}

|

|

||||||

},

|

|

||||||

"menu": {

|

|

||||||

"logs": null,

|

|

||||||

"services": null,

|

|

||||||

"index": {

|

|

||||||

"label": "Overview",

|

|

||||||

"order": -2,

|

|

||||||

"docs": [

|

|

||||||

{

|

|

||||||

"label": "Configuring system settings",

|

|

||||||

"url": "https://access.redhat.com/documentation/en-us/red_hat_enterprise_linux/8/html/managing_systems_using_the_rhel_8_web_console/getting-started-with-the-rhel-8-web-console_system-management-using-the-rhel-8-web-console"

|

|

||||||

}

|

|

||||||

],

|

|

||||||

"keywords": [

|

|

||||||

{

|

|

||||||

"matches": [

|

|

||||||

"time",

|

|

||||||

"date",

|

|

||||||

"restart",

|

|

||||||

"shut",

|

|

||||||

"domain",

|

|

||||||

"machine",

|

|

||||||

"operating system",

|

|

||||||

"os",

|

|

||||||

"asset tag",

|

|

||||||

"ssh",

|

|

||||||

"power",

|

|

||||||

"version",

|

|

||||||

"host"

|

|

||||||

]

|

|

||||||

},

|

|

||||||

{

|

|

||||||

"matches": [

|

|

||||||

"hardware",

|

|

||||||

"mitigation",

|

|

||||||

"pci",

|

|

||||||

"memory",

|

|

||||||

"cpu",

|

|

||||||

"bios",

|

|

||||||

"ram",

|

|

||||||

"dimm",

|

|

||||||

"serial"

|

|

||||||

],

|

|

||||||

"goto": "/system/hwinfo"

|

|

||||||

},

|

|

||||||

{

|

|

||||||

"matches": [

|

|

||||||

"graphs",

|

|

||||||

"metrics",

|

|

||||||

"history",

|

|

||||||

"pcp",

|

|

||||||

"cpu",

|

|

||||||

"memory",

|

|

||||||

"disks",

|

|

||||||

"network",

|

|

||||||

"cgroups",

|

|

||||||

"performance"

|

|

||||||

],

|

|

||||||

"goto": "/metrics"

|

|

||||||

}

|

|

||||||

]

|

|

||||||

},

|

|

||||||

"terminal": {

|

|

||||||

"label": "Terminal",

|

|

||||||

"keywords": [

|

|

||||||

{

|

|

||||||

"matches": [

|

|

||||||

"console",

|

|

||||||

"command",

|

|

||||||

"bash",

|

|

||||||

"shell"

|

|

||||||

]

|

|

||||||

}

|

|

||||||

]

|

|

||||||

}

|

|

||||||

},

|

|

||||||

"preload": [

|

|

||||||

"index"

|

|

||||||

],

|

|

||||||

"content-security-policy": "img-src 'self' data:"

|

|

||||||

}

|

|

||||||

|

|

@ -1,3 +0,0 @@

|

||||||

{

|

|

||||||

"tools": null

|

|

||||||

}

|

|

||||||

|

|

@ -1,31 +0,0 @@

|

||||||

{

|

|

||||||

"menu": null,

|

|

||||||

"tools": {

|

|

||||||

"index": {

|

|

||||||

"label": "Accounts",

|

|

||||||

"order": 70,

|

|

||||||

"docs": [

|

|

||||||

{

|

|

||||||

"label": "Managing user accounts",

|

|

||||||

"url": "https://access.redhat.com/documentation/en-us/red_hat_enterprise_linux/8/html/managing_systems_using_the_rhel_8_web_console/managing-user-accounts-in-the-web-console_system-management-using-the-rhel-8-web-console"

|

|

||||||

}

|

|

||||||

],

|

|

||||||

"keywords": [

|

|

||||||

{

|

|

||||||

"matches": [

|

|

||||||

"user",

|

|

||||||

"password",

|

|

||||||

"useradd",

|

|

||||||

"passwd",

|

|

||||||

"username",

|

|

||||||

"login",

|

|

||||||

"access",

|

|

||||||

"roles",

|

|

||||||

"ssh",

|

|

||||||

"keys"

|

|

||||||

]

|

|

||||||

}

|

|

||||||

]

|

|

||||||

}

|

|

||||||

}

|

|

||||||

}

|

|

||||||

|

|

@ -1,4 +0,0 @@

|

||||||

git clone --depth=1 https://github.com/Websoft9/websoft9.git

|

|

||||||

rm -rf /etc/cockpit/*.override.json

|

|

||||||

cp -r websoft9/cockpit/menu_override/* /etc/cockpit

|

|

||||||

rm -rf websoft9

|

|

||||||

|

|

@ -1,4 +0,0 @@

|

||||||

APPHUB_VERSION=0.0.6

|

|

||||||

DEPLOYMENT_VERSION=2.19.0

|

|

||||||

GIT_VERSION=1.20.4

|

|

||||||

PROXY_VERSION=2.10.4
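
The `.env` file above pins one image version per service as plain `KEY=VALUE` lines. As a minimal sketch of how such a file can be inspected outside of Docker Compose, the following Python snippet parses those four lines; the helper name `parse_env` and the inline `ENV_TEXT` sample are illustrative assumptions, not part of the repository.

```python
def parse_env(text: str) -> dict:
    """Parse simple KEY=VALUE lines, skipping blank lines and comments."""
    values = {}
    for raw in text.splitlines():
        line = raw.strip()
        if not line or line.startswith("#") or "=" not in line:
            continue
        key, _, value = line.partition("=")
        values[key.strip()] = value.strip()
    return values


# Sample content copied from the .env file shown in this diff.
ENV_TEXT = """\
APPHUB_VERSION=0.0.6
DEPLOYMENT_VERSION=2.19.0
GIT_VERSION=1.20.4
PROXY_VERSION=2.10.4
"""

versions = parse_env(ENV_TEXT)
for name, version in versions.items():
    print(f"{name} -> {version}")
```

Docker Compose reads `.env` on its own; a helper like this is only useful for scripts that need the pinned versions without invoking Compose.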

|

|

||||||

|

|

@ -1,28 +0,0 @@

|

||||||

# Docker

|

|

||||||

|

|

||||||

## Test it

|

|

||||||

|

|

||||||

All backend services of Websoft9 are packaged as Docker images. Run the following commands to start them:

|

|

||||||

|

|

||||||

```

|

|

||||||

curl -fsSL https://get.docker.com -o get-docker.sh && sh get-docker.sh && sudo systemctl enable docker && sudo systemctl start docker

|

|

||||||

sudo docker network create websoft9

|

|

||||||

wget https://websoft9.github.io/websoft9/docker/.env

|

|

||||||

wget https://websoft9.github.io/websoft9/docker/docker-compose.yml

|

|

||||||

sudo docker compose -p websoft9 up -d

|

|

||||||

```

|

|

||||||

> If you only want to switch to the development environment, run the following commands:

|

|

||||||

```

|

|

||||||

sudo docker compose -p websoft9 down -v

|

|

||||||

wget https://websoft9.github.io/websoft9/docker/docker-compose-dev.yml

|

|

||||||

# /data/source is the path of the development sources on the host

|

|

||||||

docker compose -f docker-compose-dev.yml -p websoft9 up -d --build

|

|

||||||

```

|

|

||||||

|

|

||||||

## Develop it

|

|

||||||

|

|

||||||

The folders **apphub, deployment, git, proxy** store the development files, which are used to:

|

|

||||||

|

|

||||||

- Optimize dockerfile

|

|

||||||

- Release version

|

|

||||||

- Build Docker images with GitHub Actions

|

|

||||||

|

|

@ -1,59 +0,0 @@

|

||||||

# modify time: 202311131740, you can modify here to trigger Docker Build action

|

|

||||||

|

|

||||||

FROM python:3.10-slim-bullseye

|

|

||||||

LABEL maintainer="Websoft9<help@websoft9.com>"

|

|

||||||

LABEL version="0.0.6"

|

|

||||||

|

|

||||||

WORKDIR /websoft9

|

|

||||||

|

|

||||||

ENV LIBRARY_VERSION=0.5.8

|

|

||||||

ENV MEDIA_VERSION=0.0.3

|

|

||||||

ENV websoft9_repo="https://github.com/Websoft9/websoft9"

|

|

||||||

ENV docker_library_repo="https://github.com/Websoft9/docker-library"

|

|

||||||

ENV media_repo="https://github.com/Websoft9/media"

|

|

||||||

ENV source_github_pages="https://websoft9.github.io/websoft9"

|

|

||||||

|

|

||||||

RUN apt update && apt install -y --no-install-recommends curl git jq cron iproute2 supervisor rsync wget unzip zip && \

|

|

||||||

# Prepare source files

|

|

||||||

wget $docker_library_repo/archive/refs/tags/$LIBRARY_VERSION.zip -O ./library.zip && \

|

|

||||||

unzip library.zip && \

|

|

||||||

mv docker-library-* w9library && \

|

|

||||||

rm -rf w9library/.github && \

|

|

||||||

wget $media_repo/archive/refs/tags/$MEDIA_VERSION.zip -O ./media.zip && \

|

|

||||||

unzip media.zip && \

|

|

||||||

mv media-* w9media && \

|

|

||||||

rm -rf w9media/.github && \

|

|

||||||

git clone --depth=1 https://github.com/swagger-api/swagger-ui.git && \

|

|

||||||

wget https://cdn.redoc.ly/redoc/latest/bundles/redoc.standalone.js && \

|

|

||||||

cp redoc.standalone.js swagger-ui/dist && \

|

|

||||||

git clone --depth=1 $websoft9_repo ./w9source && \

|

|

||||||

cp -r ./w9media ./media && \

|

|

||||||

cp -r ./w9library ./library && \

|

|

||||||

cp -r ./w9source/apphub ./apphub && \

|

|

||||||

cp -r ./swagger-ui/dist ./apphub/swagger-ui && \

|

|

||||||

cp -r ./w9source/apphub/src/config ./config && \

|

|

||||||

cp -r ./w9source/docker/apphub/script ./script && \

|

|

||||||

curl -o ./script/update_zip.sh $source_github_pages/scripts/update_zip.sh && \

|

|

||||||

pip install --no-cache-dir --upgrade -r apphub/requirements.txt && \

|

|

||||||

pip install -e ./apphub && \

|

|

||||||

# Clean cache and install files

|

|

||||||

rm -rf apphub/docs apphub/tests library.zip media.zip redoc.standalone.js swagger-ui w9library w9media w9source && \

|

|

||||||

apt clean && \

|

|

||||||

rm -rf /var/lib/apt/lists/* /tmp/* /var/tmp/* /usr/share/man /usr/share/doc /usr/share/doc-base

|

|

||||||

|

|

||||||

# supervisor

|

|

||||||

COPY config/supervisord.conf /etc/supervisor/conf.d/supervisord.conf

|

|

||||||

RUN chmod +r /etc/supervisor/conf.d/supervisord.conf

|

|

||||||

|

|

||||||

# cron

|

|

||||||

COPY config/cron /etc/cron.d/cron

|

|

||||||

RUN echo "" >> /etc/cron.d/cron && crontab /etc/cron.d/cron

|

|

||||||

|

|

||||||

# chmod for all .sh script

|

|

||||||

RUN find /websoft9/script -name "*.sh" -exec chmod +x {} \;

|

|

||||||

|

|

||||||

VOLUME /websoft9/apphub/logs

|

|

||||||

VOLUME /websoft9/apphub/src/config

|

|

||||||

|

|

||||||

EXPOSE 8080

|

|

||||||

ENTRYPOINT ["/websoft9/script/entrypoint.sh"]

|

|

||||||

|

|

@ -1,4 +0,0 @@

|

||||||

ARG APPHUB_VERSION

|

|

||||||

FROM websoft9dev/apphub:${APPHUB_VERSION} as buildstage

|

|

||||||

RUN mkdir -p /websoft9/apphub-dev

|

|

||||||

RUN sed -i '/supervisorctl start apphub/c\supervisorctl start apphubdev' /websoft9/script/entrypoint.sh

|

|

||||||

|

|

@ -1,11 +0,0 @@

|

||||||

# README

|

|

||||||

|

|

||||||

- Download docker-library release to image

|

|

||||||

- install git

|

|

||||||

- entrypoint: config git credential for remote gitea

|

|

||||||

- health.sh: gitea/portainer/nginx credentials; if there is an exception, output it to the logs

|

|

||||||

- use virtualenv for pip install requirements.txt

|

|

||||||

- create volumes at dockerfile

|

|

||||||

- EXPOSE port

|

|

||||||

- process logs should output to docker logs by supervisord

|

|

||||||

- [uvicorn](https://www.uvicorn.org/) loads FastAPI

|

|

||||||

|

|

@ -1 +0,0 @@

|

||||||

{"username":"appuser","password":"apppassword"}

|

|

||||||

|

|

@ -1 +0,0 @@

|

||||||

0 2 * * * /websoft9/script/update.sh
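
The cron entry above uses the five-field user crontab format (minute, hour, day of month, month, day of week) followed by the command, so `update.sh` runs every day at 02:00. The sketch below splits such a line into named fields; the function name `split_cron_entry` is a hypothetical helper for illustration, and it does not evaluate ranges, lists, or step values.

```python
FIELDS = ("minute", "hour", "day_of_month", "month", "day_of_week")


def split_cron_entry(entry: str):
    """Split a five-field crontab line into its schedule fields and command."""
    parts = entry.split(None, 5)
    if len(parts) < 6:
        raise ValueError("expected five schedule fields followed by a command")
    return dict(zip(FIELDS, parts[:5])), parts[5]


schedule, command = split_cron_entry("0 2 * * * /websoft9/script/update.sh")
print(schedule)
print(command)
```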

|

|

||||||

|

|

@ -1,45 +0,0 @@

|

||||||

[supervisord]

|

|

||||||

nodaemon=false

|

|

||||||

logfile=/var/log/supervisord.log

|

|

||||||

logfile_maxbytes=50MB

|

|

||||||

logfile_backups=10

|

|

||||||

loglevel=info

|

|

||||||

user=root

|

|

||||||

|

|

||||||

[program:apphub]

|

|

||||||

command=uvicorn src.main:app --host 0.0.0.0 --port 8080

|

|

||||||

autostart=false

|

|

||||||

user=root

|

|

||||||

directory=/websoft9/apphub

|

|

||||||

stdout_logfile=/var/log/supervisord.log

|

|

||||||

stdout_logfile_maxbytes=0

|

|

||||||

stderr_logfile=/var/log/supervisord.log

|

|

||||||

stderr_logfile_maxbytes=0

|

|

||||||

|

|

||||||

[program:apphubdev]

|

|

||||||

command=/websoft9/script/developer.sh

|

|

||||||

autostart=false

|

|

||||||

user=root

|

|

||||||

stdout_logfile=/var/log/supervisord.log

|

|

||||||

stdout_logfile_maxbytes=0

|

|

||||||

stderr_logfile=/var/log/supervisord.log

|

|

||||||

stderr_logfile_maxbytes=0

|

|

||||||

|

|

||||||

[program:cron]

|

|

||||||

command=cron -f

|

|

||||||

autostart=true

|

|

||||||

user=root

|

|

||||||

stdout_logfile=/var/log/supervisord.log

|

|

||||||

stdout_logfile_maxbytes=0

|

|

||||||

stderr_logfile=/var/log/supervisord.log

|

|

||||||

stderr_logfile_maxbytes=0

|

|

||||||

|

|

||||||

[program:media]

|

|

||||||

command=uvicorn src.media:app --host 0.0.0.0 --port 8081

|

|

||||||

autostart=true

|

|

||||||

user=root

|

|

||||||

directory=/websoft9/apphub

|

|

||||||

stdout_logfile=/var/log/supervisord.log

|

|

||||||

stdout_logfile_maxbytes=0

|

|

||||||

stderr_logfile=/var/log/supervisord.log

|

|

||||||

stderr_logfile_maxbytes=0
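
In the supervisord configuration above, `cron` and `media` start automatically, while `apphub` and `apphubdev` are left for `entrypoint.sh` to start explicitly with `supervisorctl start`. A minimal sketch, assuming the file is plain INI that Python's `configparser` can read, lists each program with its `autostart` flag; `autostart_map` and the shortened `CONF` sample are illustrative names, not repository code.

```python
import configparser

# Shortened copy of the supervisord.conf shown in this diff.
CONF = """\
[supervisord]
nodaemon=false

[program:apphub]
command=uvicorn src.main:app --host 0.0.0.0 --port 8080
autostart=false

[program:apphubdev]
command=/websoft9/script/developer.sh
autostart=false

[program:cron]
command=cron -f
autostart=true

[program:media]
command=uvicorn src.media:app --host 0.0.0.0 --port 8081
autostart=true
"""


def autostart_map(text: str) -> dict:
    """Return {program name: autostart flag} for every [program:x] section."""
    parser = configparser.ConfigParser(interpolation=None)
    parser.read_string(text)
    result = {}
    for section in parser.sections():
        if section.startswith("program:"):
            name = section.split(":", 1)[1]
            # Assumption: a missing autostart key is treated as true.
            result[name] = parser.getboolean(section, "autostart", fallback=True)
    return result


print(autostart_map(CONF))
```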

|

|

||||||

|

|

@ -1,17 +0,0 @@

|

||||||

#!/bin/bash

|

|

||||||

|

|

||||||

source_path="/websoft9/apphub-dev"

|

|

||||||

|

|

||||||

echo "Start to cp source code"

|

|

||||||

if [ ! "$(ls -A $source_path)" ]; then

|

|

||||||

cp -r /websoft9/apphub/* $source_path

|

|

||||||

fi

|

|

||||||

cp -r /websoft9/apphub/swagger-ui $source_path

|

|

||||||

|

|

||||||

echo "Install apphub cli"

|

|

||||||

pip uninstall apphub -y

|

|

||||||

pip install -e $source_path

|

|

||||||

|

|

||||||

echo "Running the apphub"

|

|

||||||

cd $source_path

|

|

||||||

exec uvicorn src.main:app --reload --host 0.0.0.0 --port 8080

|

|

||||||

|

|

@ -1,56 +0,0 @@

|

||||||

#!/bin/bash

|

|

||||||

# Define PATH

|

|

||||||

PATH=/bin:/sbin:/usr/bin:/usr/sbin:/usr/local/bin:/usr/local/sbin:~/bin

|

|

||||||

# Export PATH

|

|

||||||

export PATH

|

|

||||||

|

|

||||||

set -e

|

|

||||||

|

|

||||||

bash /websoft9/script/migration.sh

|

|

||||||

|

|

||||||

try_times=5

|

|

||||||

supervisord

|

|

||||||

supervisorctl start apphub

|

|

||||||

|

|

||||||

# set git user and email

|

|

||||||

for ((i=0; i<$try_times; i++)); do

|

|

||||||

set +e

|

|

||||||

username=$(apphub getconfig --section gitea --key user_name 2>/dev/null)

|

|

||||||

email=$(apphub getconfig --section gitea --key user_email 2>/dev/null)

|

|

||||||

set -e

|

|

||||||

if [ -n "$username" ] && [ -n "$email" ]; then

|

|

||||||

break

|

|

||||||

fi

|

|

||||||

echo "Wait for service running, retrying..."

|

|

||||||

sleep 3

|

|

||||||

done

|

|

||||||

|

|

||||||

if [[ -n "$username" ]]; then

|

|

||||||

echo "git config --global user.name $username"

|

|

||||||

git config --global user.name "$username"

|

|

||||||

else

|

|

||||||

echo "username is null, git config username failed"

|

|

||||||

exit 1

|

|

||||||

fi

|

|

||||||

|

|

||||||

regex="^[a-zA-Z0-9._%+-]+@[a-zA-Z0-9.-]+\.[a-zA-Z]{2,}$"

|

|

||||||

if [[ $email =~ $regex ]]; then

|

|

||||||

echo "git config --global user.email $email"

|

|

||||||

git config --global user.email "$email"

|

|

||||||

else

|

|

||||||

echo "Not have correct email, git config email failed"

|

|

||||||

exit 1

|

|

||||||

fi

|

|

||||||

|

|

||||||

create_apikey() {

|

|

||||||

|

|

||||||

if [ ! -f /websoft9/apphub/src/config/initialized ] || [ -z "$(apphub getkey)" ]; then

|

|

||||||

echo "Create new apikey"

|

|

||||||

apphub genkey

|

|

||||||

touch /websoft9/apphub/src/config/initialized 2>/dev/null

|

|

||||||

fi

|

|

||||||

}

|

|

||||||

|

|

||||||

create_apikey

|

|

||||||

|

|

||||||

tail -n 1000 -f /var/log/supervisord.log
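
The entrypoint script above only configures `git` when the address returned by `apphub getconfig` matches a simple email pattern. The same check can be sketched in Python as follows; `is_valid_email` is a hypothetical helper, and the pattern is copied from the script with the anchors replaced by `fullmatch`.

```python
import re

# Same character classes as the bash regex in entrypoint.sh.
EMAIL_RE = re.compile(r"[a-zA-Z0-9._%+-]+@[a-zA-Z0-9.-]+\.[a-zA-Z]{2,}")


def is_valid_email(value: str) -> bool:
    """Return True when the whole string matches the email pattern."""
    return EMAIL_RE.fullmatch(value) is not None


for candidate in ("help@websoft9.com", "user@localhost", ""):
    print(repr(candidate), is_valid_email(candidate))
```

Like the original, this is a loose syntactic check that rejects empty or obviously malformed values; it does not verify that the address exists.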

|

|

||||||

|

|

@ -1,51 +0,0 @@

|

||||||

#!/bin/bash

|

|

||||||

|

|

||||||

echo "start to migrate config.ini"

|

|

||||||

|

|

||||||

migrate_ini() {

|

|

||||||

|

|

||||||

# Define file paths: use the template ini and sync existing items from the target ini

|

|

||||||

export target_ini="$1"

|

|

||||||

export template_ini="$2"

|

|

||||||

|

|

||||||

python3 - <<EOF

|

|

||||||

import configparser

|

|

||||||

import os

|

|

||||||

import sys

|

|

||||||

|

|

||||||

target_ini = os.environ['target_ini']

|

|

||||||

template_ini = os.environ['template_ini']

|

|

||||||

|

|

||||||

# Create two config parsers

|

|

||||||

target_parser = configparser.ConfigParser()

|

|

||||||

template_parser = configparser.ConfigParser()

|

|

||||||

|

|

||||||

try:

|

|

||||||

|

|

||||||

    target_parser.read(target_ini)

|

|

||||||

    template_parser.read(template_ini)

|

|

||||||

except configparser.MissingSectionHeaderError:

|

|

||||||

    print("Error: The provided files are not valid INI files.")

|

|

||||||

    sys.exit(1)

|

|

||||||

|

|

||||||

# use target_parser to override template_parser

|

|

||||||

for section in target_parser.sections():

|

|

||||||

    if template_parser.has_section(section):

|

|

||||||

        for key, value in target_parser.items(section):

|

|

||||||

            if template_parser.has_option(section, key):

|

|

||||||

                template_parser.set(section, key, value)

|

|

||||||

|

|

||||||

|

|

||||||

with open(target_ini, 'w') as f:

|

|

||||||

    template_parser.write(f)

|

|

||||||

EOF

|

|

||||||

}

|

|

||||||

|

|

||||||

|

|

||||||